In addition, I do know about at least four different LZ4 decoders for Z80 in fact, I wrote the one that is closest to Pareto frontier on my diagram. None of these are byte-based packers, by the way. Hence, none of these standard solutions fit the described profile. The memory footprint is also not very good. However, the resulting performance is very, very poor. I do know *.arj, *.zip, even *.rar (old versions) can be decompressed on Z80. I would be very surprised if it can be competitive without Huffman on top and, either way, it is definitely would not be fitting my specified memory footprint. How close can the byte-based compressors get to the mixed ones? Are there any (many?) successful examples of such I am pretty sure that ARC used LZW. I can see that LZF would be an example of such technique, it definitely compresses better than LZ4, but still quite far behind even the simplest mixed bit/byte-based LZ77 compressors.

What would you consider to be the current "best" (subjectively, of course) byte-based compression techniques? I tried to search around, but it is not always easy to find explicit information about specific compression methods used. However, my experience tells me that to retain high decompression speeds we pretty much must forget about using bit-based (or mixed bit/byte-based) data streams.

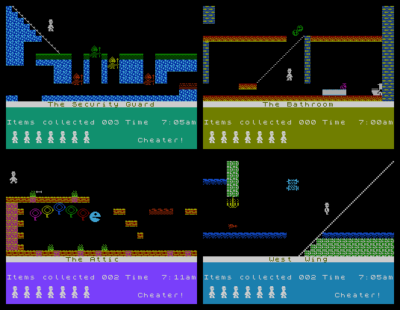

It is very likely to be possible to have compressors with relatively high decompression speeds that will compress significantly better than LZ4. It is therefore clear to me that there is a substantial gap in what is currently available. This is what the result from my tests looks like: If one plots a diagram showing compression ratio vs decompression speed, one can make a guess about Pareto frontier as applied to Z80-based systems, such as ZX Spectrum. For example, there are several ports of LZ4 for Z80. Hence, most of these methods are now being re-discovered, partly due to the reneissance of such methods on PCs. However, if one is interested in achiving high decompression speed, one pretty much has to use byte-based LZ77-type compression techniques, which are mostly forgotten since the 1990s. These techniques are fairly well understood and mature. They have to have well-optimized compressors, which do not need to be particularly efficient, because no-one ever deals with files much larger than ~40Kb. "Hrust 1") or with mechanisms for re-using recent offsets (e.g. Useful decompressors cannot use (large) memory buffers (Exomizer uses ~160 bytes and it is already considered a limitation).īasically, competitive compressors at the top end tend to be sophisticated variations on the LZ77 pattern, e.g. Useful decompressors cannot be too complex (so that they do not occupy too much memory). There are attempts at using arithmetic coders, even LZMA, but they tend to be very narrow in scope, because of the performance issues and memory limitations during decompression.

People tried and implemented compressors using Huffman codes (Exomizer in my survey uses it), but it tends to be useful only at the top end of compression. Historically, the compression on ZX Spectrum went from RLE, to byte-based LZ77-style affairs, to mixed bit/byte-based LZ77-style variations. I wrote a survey of what is available for a Z80 programmer last year you can find it here (if you don't mind that it is in Russian): (being one year old it is not fully up to date anymore, but still mostly relevant). I write programs for ZX Spectrum and compression techniques that we tend to use there are all relatively simplistic. I suppose I have a very special interest.
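To make the "byte-based" distinction concrete, here is a minimal sketch of a decoder for the LZ4 block format, the format behind the LZ4 ports for Z80 mentioned in this post. It is written in Python for readability, not speed; a real Z80 decoder would be hand-written assembly. Every field (the token's two nibbles, the 255-valued length-extension bytes, the 2-byte little-endian offset) sits on a byte boundary, so the decoder never needs a bit buffer, which is the property that keeps such decoders short and fast. The function name `lz4_block_decompress` is hypothetical, and frame headers, checksums and truncated-input validation are deliberately left out.

```python
def lz4_block_decompress(src: bytes) -> bytes:
    """Decode a raw LZ4 block (no frame header, no checksums)."""
    dst = bytearray()
    i = 0
    n = len(src)

    def read_extra(length: int) -> int:
        # A nibble value of 15 means "more length bytes follow":
        # each 255 byte adds 255 and continues, any other byte ends the run.
        nonlocal i
        if length == 15:
            while True:
                b = src[i]
                i += 1
                length += b
                if b != 255:
                    break
        return length

    while i < n:
        token = src[i]  # high nibble: literal count, low nibble: match length - 4
        i += 1
        lit_len = read_extra(token >> 4)
        dst += src[i:i + lit_len]  # literals are copied verbatim
        i += lit_len
        if i >= n:
            break  # the last sequence carries literals only
        offset = src[i] | (src[i + 1] << 8)  # 2-byte little-endian back-reference
        i += 2
        start = len(dst) - offset
        if offset == 0 or start < 0:
            raise ValueError("invalid match offset")
        match_len = read_extra(token & 0x0F) + 4  # minimum match length is 4
        # Copy one byte at a time so overlapping matches (offset < length)
        # replicate the pattern, the same way a forward LDIR copy behaves on Z80.
        for k in range(match_len):
            dst.append(dst[start + k])
    return bytes(dst)


# "abc" as literals, then a 9-byte match at offset 3, then one final literal.
block = bytes([0x35]) + b"abc" + bytes([3, 0]) + bytes([0x10]) + b"x"
print(lz4_block_decompress(block))  # b'abcabcabcabcx'
```

Note that the whole inner loop consists of byte loads, nibble masks and block copies; a mixed bit/byte-based format would add a bit-buffer refill and per-bit branching on top of this, which is where the speed difference on Z80 comes from.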

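The Pareto-frontier reading of the ratio vs. speed diagram can be made mechanical: a compressor lies on the frontier if no other compressor both compresses at least as well and decompresses at least as fast, while being strictly better on one of the two axes. Below is a small Python sketch of that filter. The `Result` type, the choice of compressed size / original size as the "ratio" (lower is better), and the sample numbers are all hypothetical placeholders, not the measurements behind the diagram.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    ratio: float  # compressed size / original size (assumed: lower is better)
    speed: float  # decompression speed in any consistent unit (higher is better)


def dominates(a: Result, b: Result) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.ratio <= b.ratio and a.speed >= b.speed
    strictly_better = a.ratio < b.ratio or a.speed > b.speed
    return no_worse and strictly_better


def pareto_frontier(results: List[Result]) -> List[Result]:
    """Keep only the non-dominated results, ordered by ratio (best compression first)."""
    frontier = [r for r in results if not any(dominates(o, r) for o in results)]
    return sorted(frontier, key=lambda r: r.ratio)


# Hypothetical placeholder measurements, NOT real benchmark data.
points = [
    Result("A", 0.60, 50.0),
    Result("B", 0.50, 20.0),
    Result("C", 0.62, 40.0),  # dominated by A: compresses worse and is slower
    Result("D", 0.45, 5.0),
]
print([r.name for r in pareto_frontier(points)])  # ['D', 'B', 'A']
```

One way to look for the kind of gap discussed in the post is then to inspect large jumps in ratio or speed between neighbouring points of the returned frontier.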